They look so convenient...

When you are sitting in front of your computer, as a photographer or a retoucher, you are always trying to save time. Whenever you have the chance to automate tedious processes, you are more than willing to do it. That’s why everybody loves using keyboard shortcuts and Photoshop actions.

Since color can be a pretty time-consuming matter, wouldn’t it be wonderful to automate this process as well?

Adobe, Phase One, and third-party creators advertise presets as if they were a true miracle: “Take your photo editing to the next level!”, “Create professional looks!”, “Speed up your workflow!”.

Problem solved, right?

So why are lots of professionals still dissatisfied with their work when it comes to color?

Roll the dice

“Color is the most relative medium in art. Every perception of color is an illusion. In our perception they alter one another.” - Josef Albers

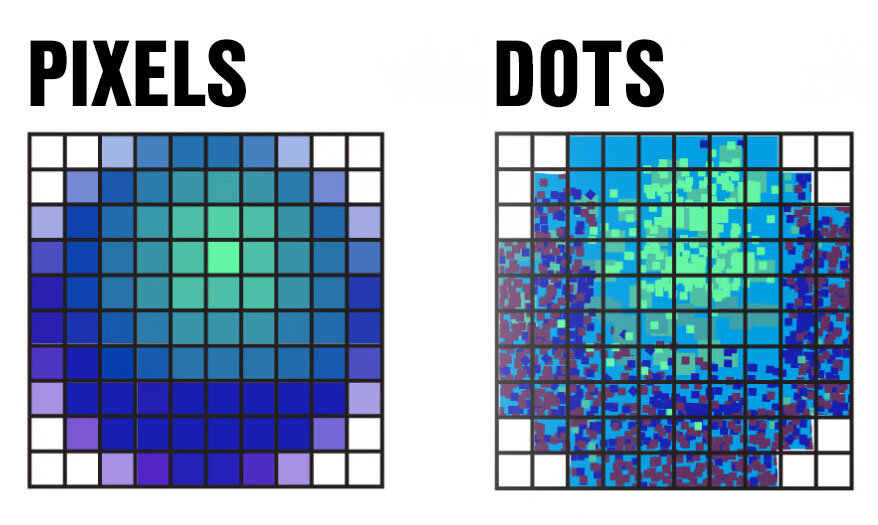

There are certain things that we cannot just delegate to a piece of software yet. Color is one of them: it is just too complex, especially if we hope to achieve repeatable and reliable results.

Using presets isn’t bad per se, but always relying on them can be very detrimental for three main reasons:

1. As a photographer, you are convincing yourself that color is something to be addressed only after the images have been taken.

2. Images from the same set can end up looking very different, since presets don’t take into account the variations that happen throughout a shoot (different light, different colors, different clothes, different elements in the background).

3. There is no thought process involved on your part. You are just rolling the dice, hoping that something interesting is going to happen.

Example

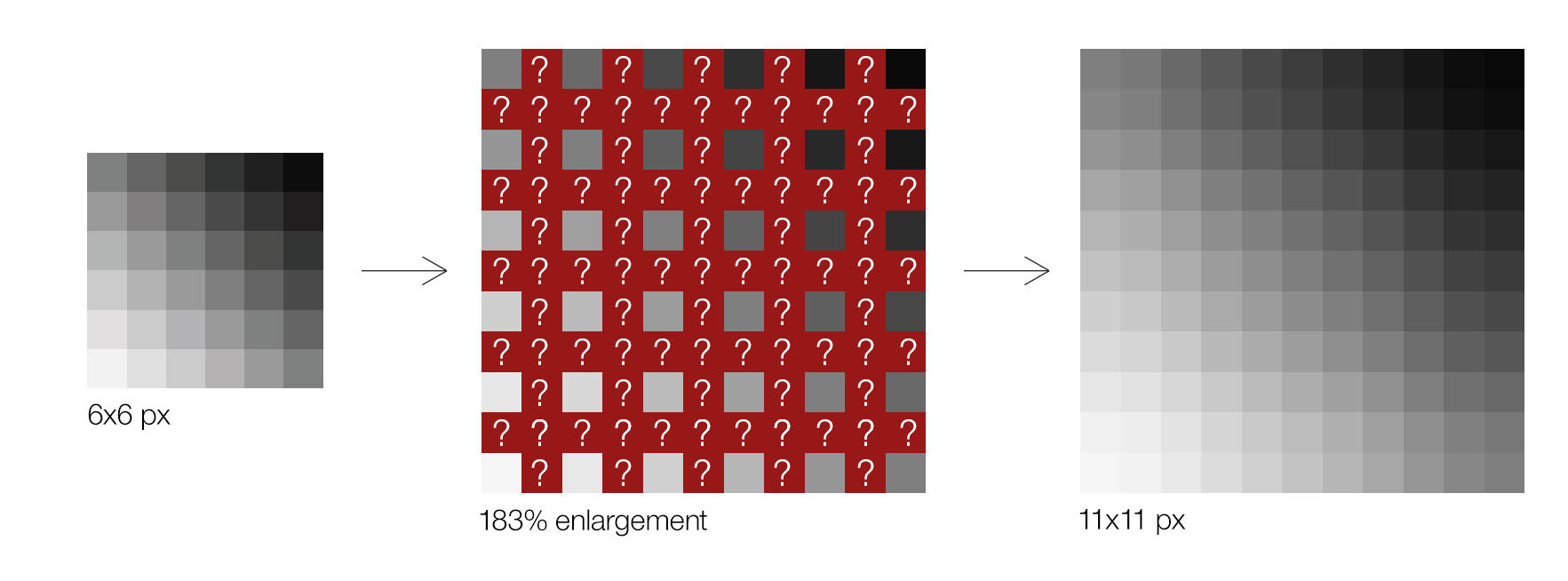

Let’s assume that the look of image A has been achieved using a preset. Then the same adjustments have been applied to image B, which was taken in the same venue but at a different time.

It’s pretty clear that the result is very different and, as they are now, image A and image B cannot work together nicely. While in image A it was perfectly fine to add warmth to a particular shade of blue/magenta, in image B the effect is just too much. Using the same “preset” just won’t work. That’s why image B requires a manual match using some masks and curves.
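If you like to see things in numbers, here is a tiny Python sketch of the same idea. It is only an illustration under my own assumptions: the function names, the “preset” gains, and the two sample colors are all made up, and a real preset is far more complex than a fixed change to two channels. The point is simply that an identical adjustment lands very differently depending on the color it starts from.

```python
# Hypothetical "preset": a fixed warming adjustment, identical for every image.
# The gains are percentages chosen purely for illustration.
def apply_warm_preset(rgb, red_gain_pct=115, blue_gain_pct=85):
    """Boost red and reduce blue by fixed percentages, clamped to 0-255."""
    r, g, b = rgb
    return (
        min(255, r * red_gain_pct // 100),
        g,
        min(255, b * blue_gain_pct // 100),
    )


def warmth(rgb):
    """Crude warmth measure (an assumption for this sketch): red minus blue."""
    return rgb[0] - rgb[2]


# Made-up sample colors standing in for the same background in two shots.
background_a = (90, 100, 160)   # shot in cool, bluish light
background_b = (170, 120, 110)  # shot later, in light that is already warm

result_a = apply_warm_preset(background_a)
result_b = apply_warm_preset(background_b)

print("Image A:", background_a, "->", result_a, "warmth", warmth(result_a))
print("Image B:", background_b, "->", result_b, "warmth", warmth(result_b))
```

In this toy example the cool background of image A is only pulled closer to neutral, while the already warm background of image B is pushed much further into orange. Same adjustment, very different outcome.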

Learn from them instead

Over time, depending on these automations has a compounding effect that keeps you from growing.

I know because this is what I did for a very long time.

If you want to use presets, do it the smart way.

Use them as a tool to study color theory.

If something looks very nice with a specific preset, try to understand why. Which element has been influenced the most by the preset? The overall contrast? A specific color? Which color harmony is it creating?

Books you should read

If you are interested, I wrote a guest post on Mareike Keicher’s blog a while ago. I listed the best books for photographers and retouchers that I have read. The topics discussed in these books can be beneficial if you want to be more deliberate and confident when it comes to colors and visual arts in general.

Remember to also check out her work on her website.